In today’s article, we are joined by Iñaki Huerta, who brings us insights from his talk at MeasureCamp Madrid 2024. This blog post, originally published in Ikaue’s blog, is an overview of Huerta’s presentation on Data Quality and Automated Data Validation in GA4. His presentation was highly acclaimed, highlighting the importance of this often overlooked but vital aspect of data analytics.

Thank you again for letting us share it with our audience!

At MeasureCamp Madrid 2024, I presented a talk filled with methodologies and resources aimed at helping fellow analysts with one of the most repetitive and least visible tasks in the profession: data validation and qualification. The talk was very well received, even leading to a room change due to the high level of interest. This was a great sign, showing that, despite not being the most glamorous topic, it undoubtedly concerns and is crucial in the analyst's work.

Below there's a summary of all the details we discussed.

TL;DR: Executive Summary for GA4 Data Validation

To effectively manage Data Quality and perform validation in Google Analytics 4 (GA4), analysts must move from manual checks to automated processes.

- Data Validation is Mandatory: In dynamic environments (GA4, Consent Mode, Server-Side Tagging), data is unstable and must be verified frequently.

- Prioritize the Funnel End: Start validation checks with GA4 and BigQuery reports, and only then proceed backward (GTM, DataLayer) when errors are detected.

- Implement Automation Tools: Utilize Crawlers, RPAs (like Chrome Session Recording, Screaming Frog), and JS Trackers to collect hundreds of data points instantly.

- Leverage BigQuery for Validation: Use GA4's BigQuery connection to create 'flattened' tables and automated alerts for cleaner, unprocessed hit-level data validation.

¿Why is data validation in GA4 a mandatory task for analysts?

The instability of the web and app data ecosystem forces us to validate.

In dynamic websites, measurements often break—sometimes drastically, and other times due to a simple variable deviation that completely disrupts our reports and statistics.

In a world saturated with "Fake News," digital businesses face the threat of "Fake Data," which can be as obvious as large peaks and valleys in trends, or as subtle but still dangerous.

Let’s be clear: with false data, our work is not only useless but also dangerous. That’s why it’s essential to validate our data again and again. A validation process that becomes increasingly slow and tedious as the project grows and becomes more complex.

"During the talk at MeasureCamp Madrid, we confirmed with attendees that the time spent on these validation tasks can shockingly account for more than 40% of our effort." — Iñaki Huerta, CEO of IKAUE

We’re talking about a burden no one wants to see, our company or client does not value it (as they take it for granted), and the analyst is aware of, as he/she sees how opportunities to create value are lost due to the time spent on daily validation.

However, this work is not a choice. Validating and, above all, qualifying the data is mandatory and must be done before any other task. Does everyone validate? No. Lazy people exist and will always exist, but the consequences of not doing so are too many and too impactful to consider not doing it:

- Changes that go unnoticed

- Gaps in the data

- Incorrect measurements that persist over time

- KPIs incorrectly distributed among dimensions

- Inability to trust temporal comparisons (MoM, YoY, etc.)

- Decorated data

- Fake Insights

The new GA4 ecosystem and its complications

The timing doesn’t help. GA4 and its new technologies complicate everything

It's more than evident that the digital ecosystem, with the advent of GA4, has become more technical. Consent Mode, Cookies, Modeling, mobile apps, and the inevitable arrival of server-side tagging complicate measurement.

Just as you’re getting a handle on one new technology, another is already competing to become our new essential measurement tool.

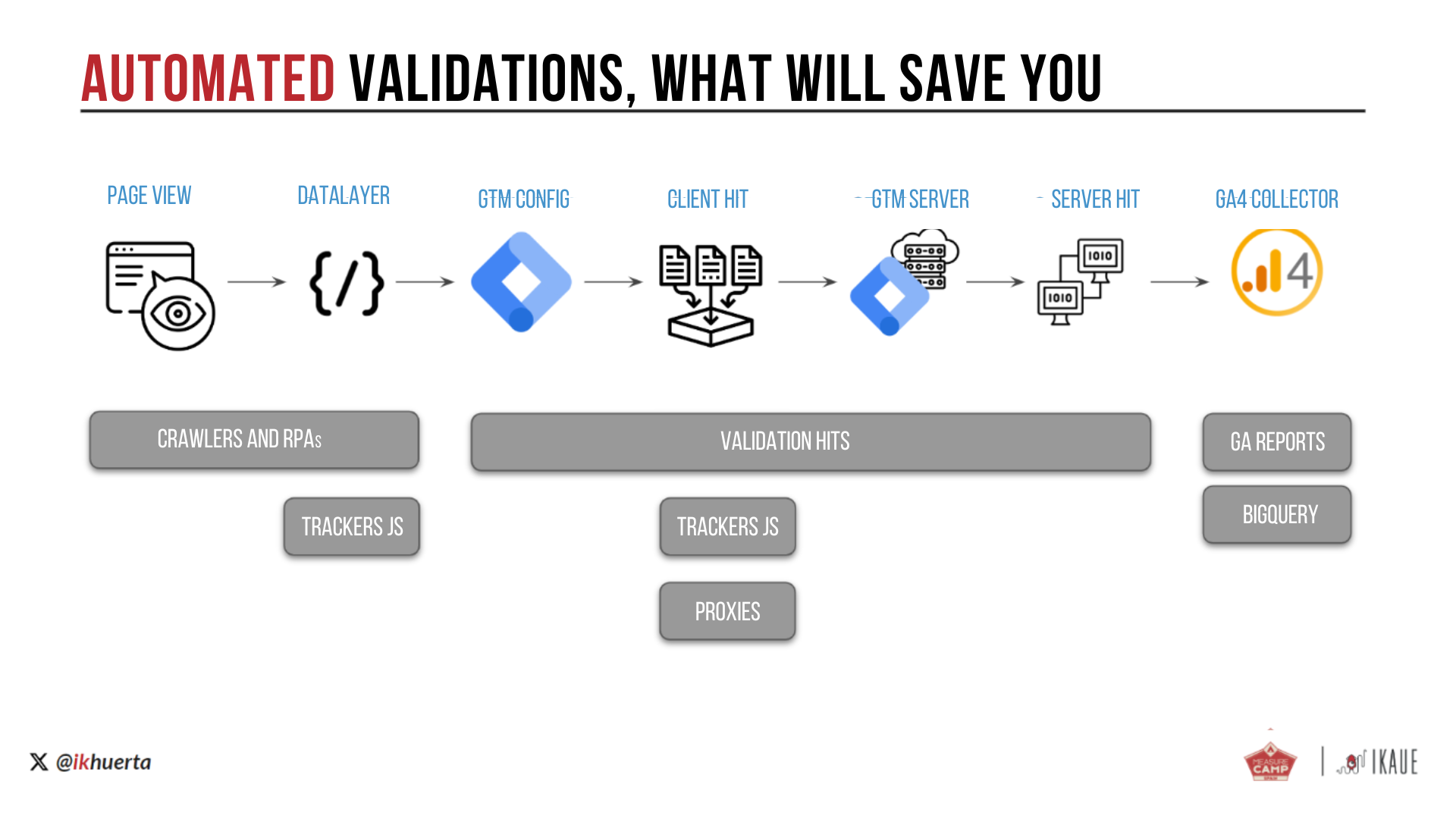

This leads us to a data flow towards GA4 with too many steps. Data must be displayed on a website or screen formalized in a dataLayer, which is then read and interpreted by a client-side tag manager, which generates a data hit to be measured. This hit is then read by a server-side tag manager, which reinterprets it, sending multiple hits from the server to various tools, until the GA4 collector finally captures, stores, and processes it. Without context, this sounds almost like a bad joke, but it’s the reality many of us face and the one we will all encounter soon enough.Understanding the Data Loss Funnel (and manual tools)

Each tool and process adds its value, this is undeniable. If this wasn’t the case, we wouldn’t use them. However, so many steps also weaken the system, increasing the number of points where data might fail or become corrupted.

Understanding the Data Loss Funnel (and manual tools)

If each step in a linear process causes leaks and errors, what we get is a system that analysts are well-acquainted with: a nice funnel. Only, instead of conversions, this one is designed to obtain quality data.

The Data Loss Funnel is a conceptual model that maps the potential points of failure or data degradation from the moment an event occurs on the website/app to its final storage in GA4.

By examining this process in detail, we can enter each step, calculate its impact, and, most importantly, isolate data quality issues at the specific points in the funnel that cause them.

Once everything is structured, we apply our suite of tools—a collection that has gradually become standardized and provides us with detailed information for each step of the data loss funnel.

The suite of tools for manual validation

These are necessary tools, but they all require a person to navigate, review data one by one, and conduct repetitive tests:

¿How can we automate the data collection for validations in GA4?

These systems are great and necessary. But they are all too manual. They require a person to navigate through each page and review data one by one, conduct tests, make fake purchases, and perform any interaction a user might make.

Not only that. These validations are not an isolated or discrete task in our work. It is a repetitive task that we might end up doing every week.

In every project.

For every event.

With every production deployment.

Whenever we doubt our data or observe changes in trends.

Whenever we tackle a new area of analysis.

Every single damn day of our lives.

We cannot stop validating, but we must strive to minimize the time spent on these manual, repetitive tasks. This is where automated validation techniques come into play.

Automated Data Validation refers to the use of software, scripts, or specialized tools to quickly and continuously collect and compare analytics data against expected business rules, minimizing manual effort.

The first approach is to understand this is a funnel and, since the data is interconnected, we are not required to examine every step. A funnel is tackled from the end. In this case, from the data captured in GA4. Only when problems are evident is when we look into key steps. Then we can address the rest of the steps, but this perspective allows us to avoid reviewing a lot of unnecessary information and focus our daily efforts on a few tools: the most effective and efficient ones.

Even so, this time is still too much. Too repetitive. Too many manual data captures. Can’t it be streamlined? This is where automated validation techniques come into play.

Automating data collection for validations

The concept is simple. Validation is necessary. But, aren’t there systems that provide us with a quick, automatic view and/or allow us to validate numerous interactions at once?

Yes, there are! Just like before, we can create a suite of tools designed to automate validations or at least the data collection process.

A suite of tools for automated validation

Crawlers y RPAs (Robotic Process Automation)

These are automatic systems that navigate your website thoroughly, following all links (crawlers) or programming a sequence of navigation actions (RPA). Both systems allow you to collect a large amount of data for validation all at once—hundreds or thousands of data points. This will change your validation approach, as you’ll no longer need to review data point by point but instead, you’ll work with sheets or, better yet, dashboards with the total data.

Among the options that do not require custom development and are available to everyone, we highlight two:

Starting with version 20, Screaming Frog has incorporated custom JS element scraping. On each page that the crawler visits, the JavaScript snippets you define are injected, either to interact with the website or to gather data. In practice, this is very similar to the functions you would use in GTM. In other words, if you know how to create custom tags in GTM, you’ll be able to leverage this functionality effectively.

As an example, here’s a little gift: 3 pre-created scripts dedicated to scraping the dataLayer of a website.

With just these 3 systems, you’ll be able to collect all the dataLayers of a business with thousands or hundreds of thousands of URLs (and even millions, but be prepared to leave your computer running for several days).

Download them here: https://ikaue.com/recursos/fragmentos-custom-js-para-screaming-frog

Here are some additional ideas about what you can do with this technique:

Trigger Validation Hits

This technique allows us to create a small database to facilitate the validation of real data in events that are critical to our business. Essentially, we send hits to our database (Sheets, BigQuery, or whatever you prefer) with key information about what’s happening on our website.

This approach, by comparing this data with real databases or with GA4 itself, will allow us to identify the actual issue causing data not to be saved for certain users or to be saved incorrectly.

Performing this professionally simply requires creating a custom variable in GTM and an endpoint (a URL) capable of receiving and storing this data.

Ideally, this should be done using cloud-based tools. A technically simple example: Create the endpoint with Google Cloud Functions (which will provide you with a URL and connect directly to the Google API) and store the data in Google BigQuery.

Another, more makeshift but much faster method; Create a Google Form and use the form’s destination URL to store all incoming data. Since Google Forms can be connected to Google Sheets and Google Sheets can be connected to BigQuery, the result will be quite similar without having to deal with code.

You can see how to create these types of solutions with Google Forms in this thread I posted on X some time ago.

These solutions will help you avoid many repetitive tests. Just implement them and capture whatever you need from your users.

JS Trackers and Proxies

Very similar to the sniffers and proxies we used manually, but now applied to the actual web browsing. To do this, we’ll count on JS interceptors or a sending proxy in the app. GTM and GA are implemented the usual way, but with these systems, every hit to GA (or any tool) will be captured before it occurs.

In other words, we create a spy for all the analytics we wish to audit. With this data, through dashboards and BigQuery, we can create truly impressive types of automated analysis.

What follows is a screenshot of an analysis we perform at IKAUE. It is a Looker Studio dashboard that shows all the dataLayers, errors, and data submissions to GA sorted by submission time. With it, we can see how hits occur and whether any are duplicated or arrive too late. This is something that would manually require a GA QA expert and quite a bit of analysis time.

Trackingplan as a solution

As an example of commercial trackers, we highlight the great option that Trackingplan can offer. This tool not only captures all data but also creates predictive models that automatically detect inconsistencies or gaps in implementations (e.g., alerting you if the add_to_cart event is missing or a parameter is absent in a certain area of your website). This transforms validation from a manual review into a continuous, automated QA process.

GA/Looker and BigQuery Reports

With GA data reports, we can perform true marvels for validation. Ultimately, this is the vision that matters: if we don’t have data where we expected it, it indicates that the rest of the funnel needs to be reviewed. Our advice: create reports solely for validating GA4.

You can start by using our GA4 validation dashboard. This dashboard is designed for auditing data rather than for viewing trends or conversions.

The connection between GA4 and BigQuery allows us to take a further step. This is because this connection, whether we like it or not, is cleaner and less processed than the information we see in GA4. BigQuery stores hits almost as they arrive, without applying transformations, attribution models, or user ID modeling with Google Signals or consent mode data modeling. This makes creating reports with BigQuery a challenge, but at the same time, it transforms this database into a collection of hits ideal for validating implementations.

With this perspective in mind, we present our methodology, based on 3 different ways to use BigQuery to facilitate validations:

IKAUE's BigQuery Validation Methodology

- Simple Validations: Viewing individual data points using straightforward queries on a 'flattened' BigQuery table.

- Complex Validations: Creating trend queries with conversions to find the data you need.

- Alerts for Incorrect Data: Connecting automation software (N8N, Make, Zapier) to validate daily or at regular intervals that there is no unexpected data in your BigQuery.

For the latter, we use N8N, a technology you can learn about on our blog, as we have a special section on the tool. However, it could also be used with Make, Zapier, or even Notebooks or direct code.

Simple Validations

To work on simple validations, the only issue we need to solve is flattening the BigQuery queries. GA4 tables are somewhat complex. Some fields within them hide collections of structured data that need to be reorganized to view them, which complicates things a bit. Therefore, the first step should be to transform this table into a normal one that can be queried with simple SQL (SELECT * FROM...).

For this, we offer two options:

In both cases, we will achieve a flat table that makes it easy to check values of dimensions, URLs, campaigns, and many other details.

Once we have these flattened, our advice is to apply them to Google Sheets. Google Sheets has long allowed the creation of ‘Connected Sheets,’ which are sheets within a spreadsheet that are actually a connection to a BigQuery table or query. By connecting these simplifying queries to Sheets, we’ll have a system where we can use Sheets/Excel functions to perform validations.

Additionally, Google Sheets’ Connected Sheets have features such as column statistics that will show you all the captured data with its weight and will allow you to create dynamic tables to extract the data you need.

In Conclusion

We need to perform validations, but there is already a world of solutions that can automate this work in some areas. Facing new tools and solutions can be quite time-consuming. It might even take a significant amount of hours to set up these initial automations. But the good thing about this process is that each step you take now will free you from future workload.

Being able to validate without having to manually navigate the web over and over again, or to run GA or GTM debugging, is possible. The trick is to establish a path— a roadmap that allows you to periodically free up and streamline validations a bit more. It will take the time needed to reach an optimal point, but each step will give you more and more free time to focus on what you truly love: data analysis and gaining insights.